Access and Use Tongyi DeepResearch 30B A3B via OpenRouter Using an API Key


Tongyi DeepResearch is an agentic large language model developed by Tongyi Lab, with 30 billion total parameters of which only 3 billion are activated per token. It is optimized for long-horizon, deep information-seeking tasks and reports state-of-the-art results on benchmarks such as Humanity's Last Exam, BrowseComp, BrowseComp-ZH, WebWalkerQA, GAIA, xbench-DeepSearch, and FRAMES. These results make it well suited to complex agentic search, reasoning, and multi-step problem-solving, where it outperforms prior models on those benchmarks.

Its training relies on a fully automated synthetic data pipeline that scales across pre-training, fine-tuning, and reinforcement learning. Large-scale continual pre-training on diverse agentic data strengthens its reasoning and keeps its knowledge current. It also uses end-to-end on-policy reinforcement learning based on a customized Group Relative Policy Optimization (GRPO), with token-level policy gradients and negative-sample filtering to keep training stable. At inference time, the model supports the ReAct paradigm for evaluating its core abilities, plus an IterResearch-based 'Heavy' mode that maximizes performance through test-time scaling. This makes it a strong fit for advanced research agents, tool use, and inference-heavy workflows.

Tongyi DeepResearch 30B A3B Overview

| Field | Value |
|---|---|
| Full Name | Tongyi DeepResearch 30B A3B |
| Provider | Alibaba (Tongyi Lab) |
| Model ID | alibaba/tongyi-deepresearch-30b-a3b |
| Release Date | Sep 18, 2025 |
| Context Window | 131,072 tokens |
| Pricing (per 1M tokens) | $0 input, $0 output |
| Supported Input Types | text |
| Supported Parameters | frequency_penalty, include_reasoning, max_tokens, min_p, presence_penalty, reasoning, repetition_penalty, response_format, seed, stop, structured_outputs, temperature, tool_choice, tools, top_k, top_p |
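The Supported Parameters row can also serve as a client-side guard when you call the model from your own scripts. What follows is a minimal sketch that assumes request bodies are built as Python dicts. The helper name filter_supported_params is hypothetical. It keeps model, messages, and the listed parameters, and reports everything else so you can drop it before sending. Treating model and messages as always allowed is an assumption, because the table does not list them.

```python
# Parameters listed for alibaba/tongyi-deepresearch-30b-a3b in the overview table.
SUPPORTED_PARAMS = frozenset({
    "frequency_penalty", "include_reasoning", "max_tokens", "min_p",
    "presence_penalty", "reasoning", "repetition_penalty", "response_format",
    "seed", "stop", "structured_outputs", "temperature", "tool_choice",
    "tools", "top_k", "top_p",
})

# Core request fields that are always sent (assumption: the table omits them).
CORE_FIELDS = frozenset({"model", "messages"})


def filter_supported_params(payload: dict) -> tuple[dict, list[str]]:
    """Split a request body into allowed fields and the sorted names of dropped fields."""
    allowed = CORE_FIELDS | SUPPORTED_PARAMS
    kept = {k: v for k, v in payload.items() if k in allowed}
    dropped = sorted(k for k in payload if k not in allowed)
    return kept, dropped


if __name__ == "__main__":
    body = {
        "model": "alibaba/tongyi-deepresearch-30b-a3b",
        "messages": [{"role": "user", "content": "Summarize recent work on GRPO."}],
        "temperature": 0.6,
        "logprobs": True,  # not in the supported list, so it is reported as dropped
    }
    kept, dropped = filter_supported_params(body)
    print(dropped)  # prints ['logprobs']
```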

Complete Setup Guide

Create an OpenRouter Account

- Visit openrouter.ai

- Click "Sign In" and create a free account

- Verify your email address

- You'll receive $1 in free credits to test models

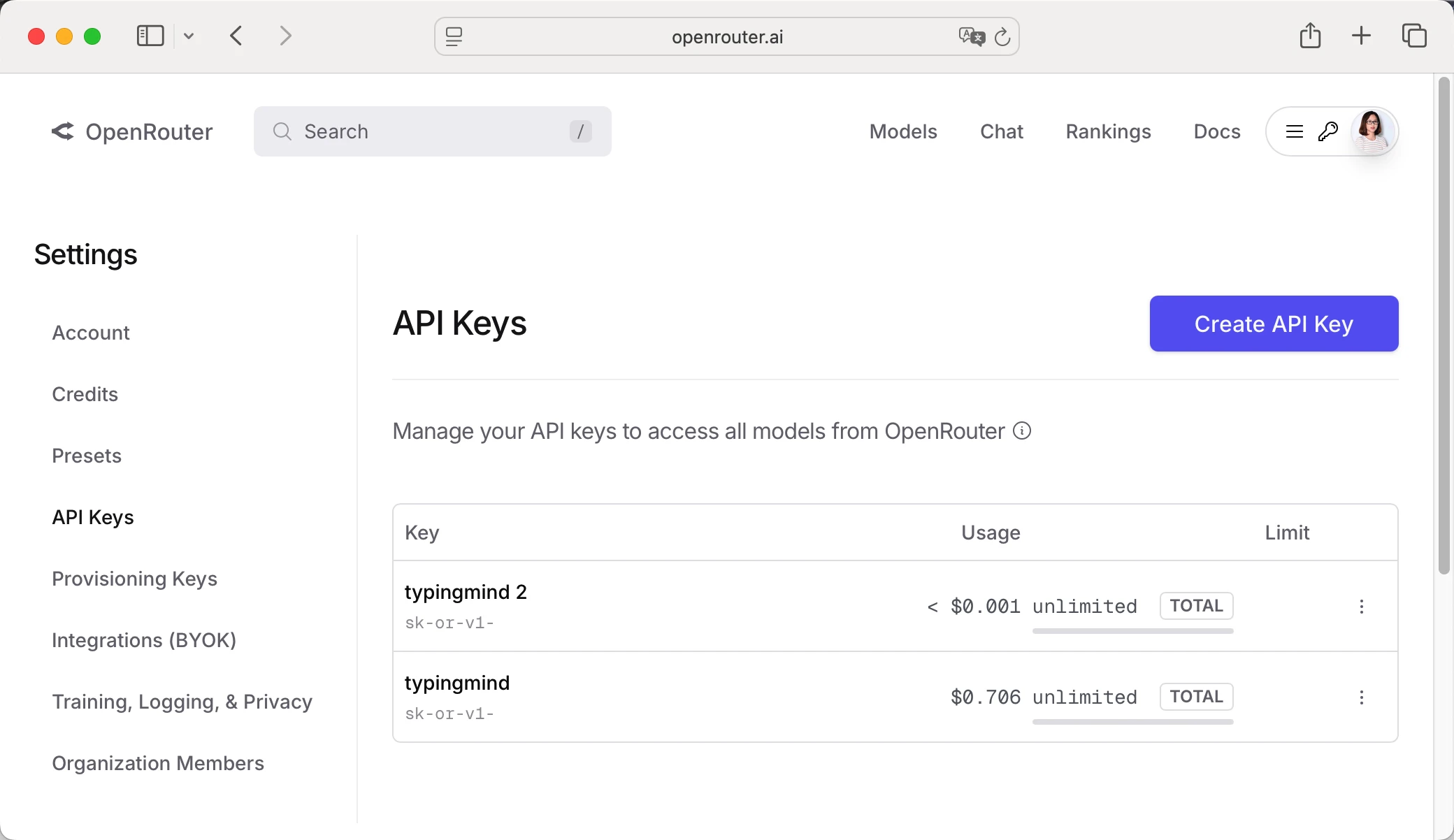

Get Your OpenRouter API Key

- Log in to the OpenRouter dashboard

- Go to the "API Keys" section in the menu

- Click "Create API Key"

- Give it a name (e.g., "TypingMind")

- Copy your API key (starts with "sk-or-v1-...")
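If you also plan to call OpenRouter from scripts, keep the key out of your source code. The sketch below assumes the key lives in an environment variable. The name OPENROUTER_API_KEY is a common convention, not something OpenRouter requires. The helper load_openrouter_key is hypothetical and only checks the "sk-or-v1-" prefix mentioned above.

```python
import os

# Assumption: the key is stored in this environment variable rather than in code.
ENV_VAR = "OPENROUTER_API_KEY"
# Prefix shown for OpenRouter keys in the dashboard.
KEY_PREFIX = "sk-or-v1-"


def load_openrouter_key(env_var: str = ENV_VAR) -> str:
    """Read the OpenRouter API key from the environment and sanity-check its prefix."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"{env_var} is not set")
    if not key.startswith(KEY_PREFIX):
        raise ValueError(f"expected a key starting with {KEY_PREFIX!r}")
    return key
```

Set the variable in your shell or secrets manager before running anything that calls this helper. Failing early on a missing or malformed key gives a clearer error than a rejected HTTP request.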

Add Credits to OpenRouter (Optional)

- Go to "Credits" in the OpenRouter dashboard

- Click "Add Credits"

- Choose an amount ($5 minimum, $20 recommended for testing)

- Complete payment (credit card or crypto)

- Credits never expire!

Configure TypingMind with OpenRouter API Key

Method 1: Direct Import (Recommended)

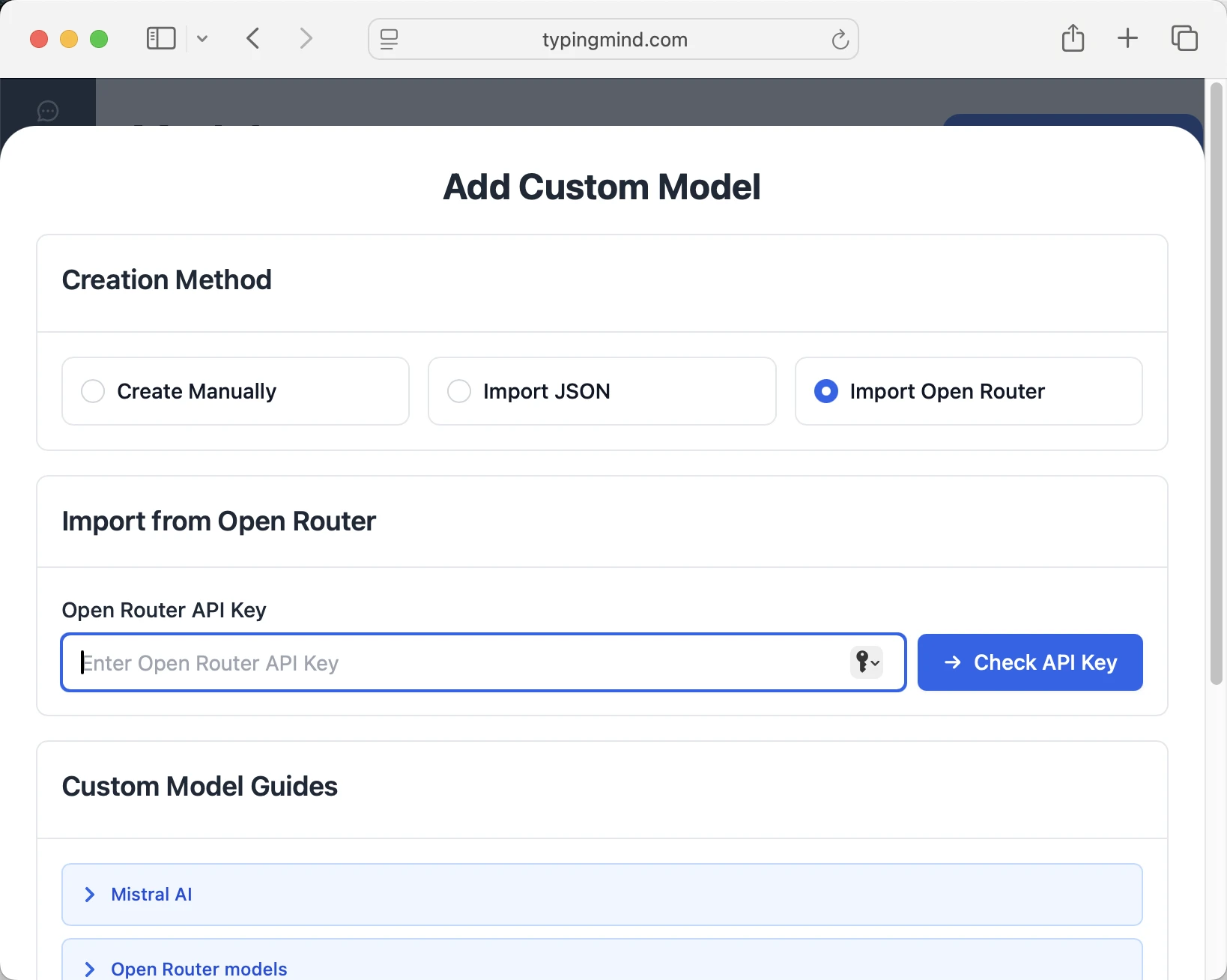

- Open TypingMind in your browser

- Click the "Settings" icon (gear symbol)

- Navigate to the "Manage Models" section

- Click "Add Custom Model"

- Select "Import OpenRouter" from the options

- Enter the OpenRouter API key you created earlier

- Click "Check API Key" to verify the connection

- Choose which models you want to add from the list (you can add multiple at once)

- Click "Import Models" to complete the setup

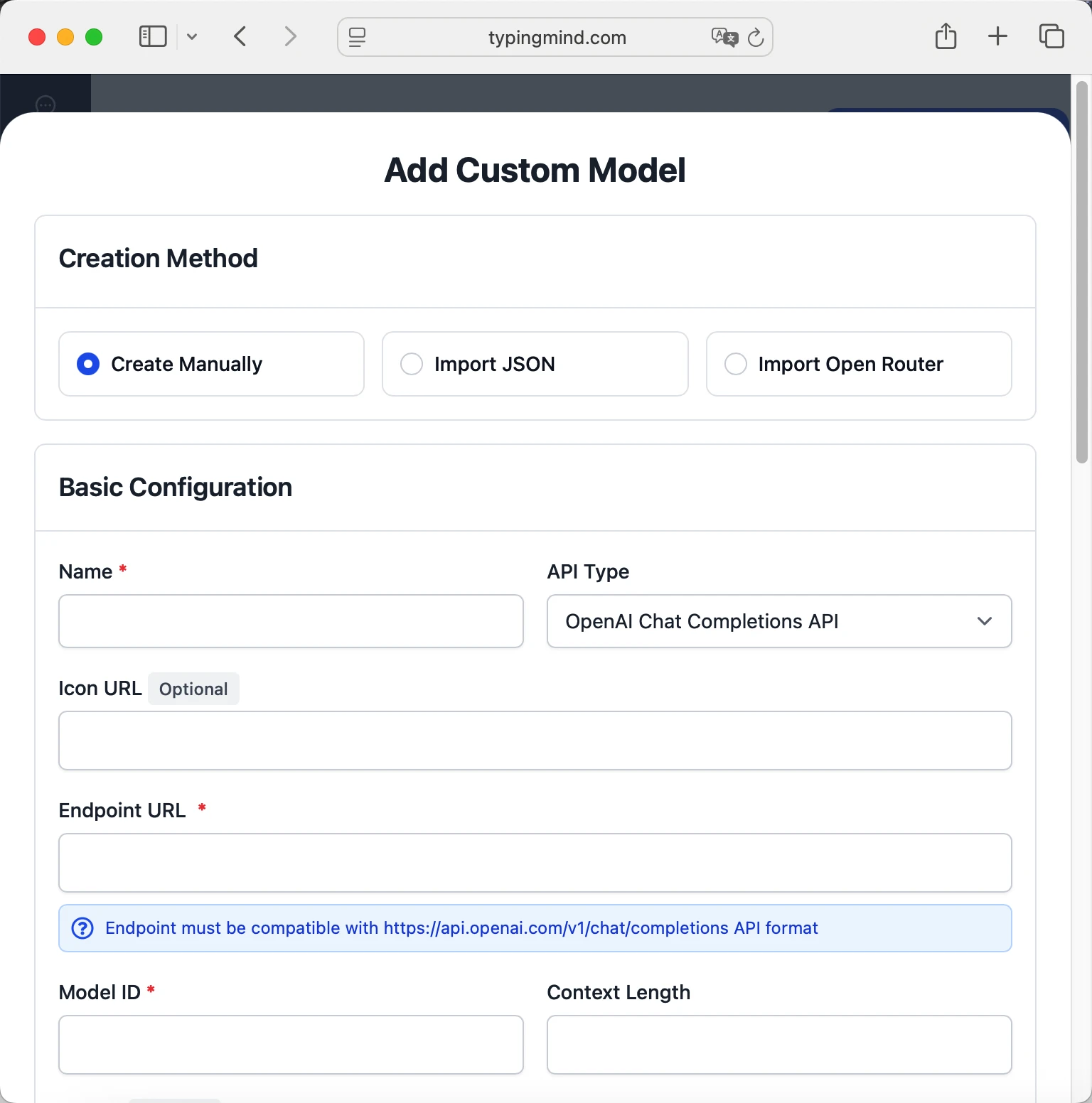
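The import in Method 1 draws on OpenRouter's model catalog. You can confirm the model ID and context length outside TypingMind by querying the catalog at https://openrouter.ai/api/v1/models. The sketch below makes three assumptions: the JSON body has a top-level "data" list, each entry carries "id" and "context_length" fields, and the endpoint needs no API key. The helper names fetch_models and find_model are hypothetical.

```python
import json
import urllib.request
from typing import Optional

MODELS_URL = "https://openrouter.ai/api/v1/models"


def fetch_models(url: str = MODELS_URL) -> dict:
    """Download the model catalog (network call; assumed not to need an API key)."""
    with urllib.request.urlopen(url, timeout=30) as resp:
        return json.load(resp)


def find_model(catalog: dict, model_id: str) -> Optional[dict]:
    """Return the catalog entry whose "id" matches model_id, or None if absent."""
    for entry in catalog.get("data", []):
        if entry.get("id") == model_id:
            return entry
    return None
```

Calling find_model(fetch_models(), "alibaba/tongyi-deepresearch-30b-a3b") should return the catalog entry. Its "context_length" is the value to enter as Context Length in Method 2. Keeping the lookup separate from the download makes it testable without network access.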

Method 2: Manual Custom Model Setup

- Open TypingMind in your browser

- Click the "Settings" icon (gear symbol)

- Navigate to the "Models" section

- Click "Add Custom Model"

- Fill in the model information:
  - Name: alibaba/tongyi-deepresearch-30b-a3b via OpenRouter (or your preferred name)
  - Endpoint: https://openrouter.ai/api/v1/chat/completions
  - Model ID: alibaba/tongyi-deepresearch-30b-a3b
  - Context Length: the model's context window (e.g., 131072 for alibaba/tongyi-deepresearch-30b-a3b)

- Add custom headers by clicking "Add Custom Headers" in the Advanced Settings section:
  - Authorization: Bearer <OPENROUTER_API_KEY>
  - X-Title: typingmind.com
  - HTTP-Referer: https://www.typingmind.com

- Enable "Support Plugins (via OpenAI Functions)" if the model supports the "functions" or "tools" parameter; this model lists tools and tool_choice among its supported parameters. Enable "Support OpenAI Vision" only for vision-capable models, since Tongyi DeepResearch 30B A3B accepts text input only.

- Click "Test" to verify the configuration

- If you see "Nice, the endpoint is working!", click "Add Model"

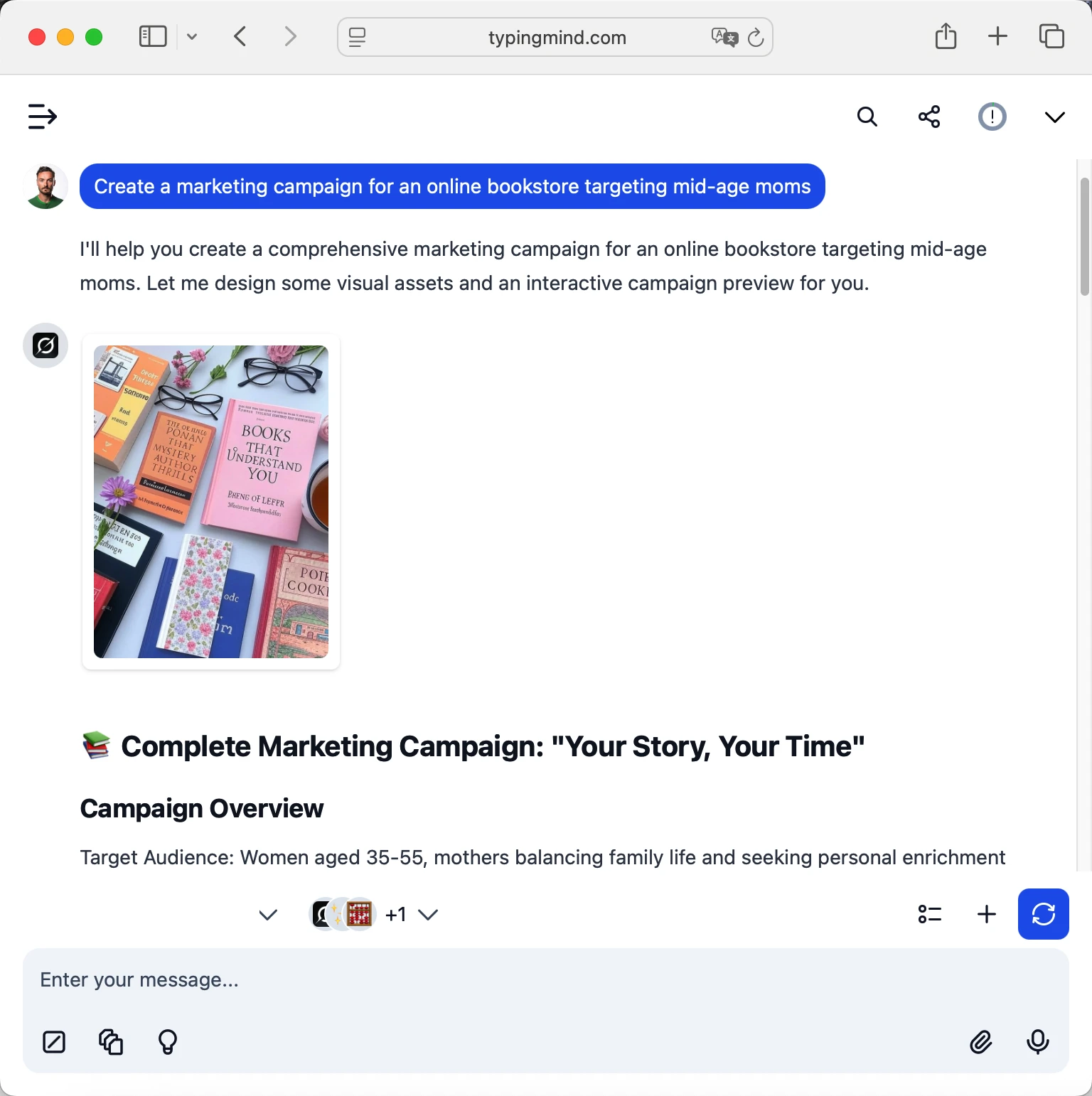
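The fields and headers in Method 2 describe an ordinary HTTP request. The sketch below builds the equivalent request with Python's standard library but does not send it. The endpoint, model ID, and header values come from the setup steps. The Content-Type header and the single-message body are assumptions about a minimal chat call. The helper name build_chat_request is hypothetical.

```python
import json
import urllib.request

# Values taken from the manual setup fields.
ENDPOINT = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "alibaba/tongyi-deepresearch-30b-a3b"


def build_chat_request(api_key: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a POST request equivalent to the custom-model configuration."""
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        # Attribution headers, mirroring the TypingMind custom headers.
        "X-Title": "typingmind.com",
        "HTTP-Referer": "https://www.typingmind.com",
    }
    body = {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

Pass the result to urllib.request.urlopen to actually send it. urllib stores header names in capitalized form (for example "X-title"). Servers treat this as equivalent, because HTTP header names are case-insensitive.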

Start chatting with alibaba/tongyi-deepresearch-30b-a3b

Now you can start chatting with the alibaba/tongyi-deepresearch-30b-a3b model via OpenRouter on TypingMind:

- Select alibaba/tongyi-deepresearch-30b-a3b from the model dropdown menu

- Start typing your message in the chat input

- Enjoy faster responses and better features than the official interface

- Switch between different AI models as needed
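The same conversation can also be scripted against the chat completions endpoint. This sketch makes two assumptions. First, the response follows the OpenAI-style shape, with the reply text at choices[0].message.content. Second, the key is read from an environment variable named OPENROUTER_API_KEY, which is a hypothetical name. The chat and extract_reply helpers are illustrative, not part of any official SDK.

```python
import json
import os
import urllib.request

ENDPOINT = "https://openrouter.ai/api/v1/chat/completions"
MODEL_ID = "alibaba/tongyi-deepresearch-30b-a3b"


def extract_reply(response: dict) -> str:
    """Pull the assistant text out of an OpenAI-style chat completion response."""
    choices = response.get("choices") or []
    if not choices:
        raise ValueError("response contained no choices")
    return choices[0]["message"].get("content") or ""


def chat(messages: list, api_key: str = "", **params) -> str:
    """Send one chat turn and return the reply text (performs a network call)."""
    key = api_key or os.environ["OPENROUTER_API_KEY"]  # hypothetical variable name
    body = {"model": MODEL_ID, "messages": messages, **params}
    req = urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {key}", "Content-Type": "application/json"},
        method="POST",
    )
    # A long timeout leaves room for lengthy research-style answers.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return extract_reply(json.load(resp))
```

For example, chat([{"role": "user", "content": "Compare GAIA and FRAMES."}], max_tokens=4096) sends a single turn. To continue the conversation, append the reply to the list as an assistant message. The 300-second timeout is a design choice, not a documented limit.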


Pro tips for better results:

- Use specific, detailed prompts for better responses (How to use Prompt Library)

- Create AI agents with custom instructions for repeated tasks (How to create AI Agents)

- Use plugins to extend alibaba/tongyi-deepresearch-30b-a3b capabilities (How to use plugins)

- Upload documents directly to chat for AI analysis and discussion (Chat with documents); this model accepts text input only, so image analysis requires a vision-capable model
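TypingMind plugins correspond to the tools and tool_choice parameters listed in the overview table. The sketch below builds a request body in the OpenAI function-calling format, which OpenRouter accepts, and parses any tool calls in the reply. The web_search tool, its schema, and the helper names are hypothetical placeholders, not a built-in TypingMind plugin.

```python
import json

MODEL_ID = "alibaba/tongyi-deepresearch-30b-a3b"

# Hypothetical tool definition in the OpenAI function-calling schema.
WEB_SEARCH_TOOL = {
    "type": "function",
    "function": {
        "name": "web_search",
        "description": "Search the web and return the top results as text.",
        "parameters": {
            "type": "object",
            "properties": {
                "query": {"type": "string", "description": "Search query"},
            },
            "required": ["query"],
        },
    },
}


def build_tool_request(question: str) -> dict:
    """Return a chat completion body that lets the model decide whether to call the tool."""
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": question}],
        "tools": [WEB_SEARCH_TOOL],
        "tool_choice": "auto",
    }


def parse_tool_calls(response: dict) -> list[tuple[str, dict]]:
    """Extract (function name, parsed JSON arguments) pairs from the first choice."""
    message = response["choices"][0]["message"]
    calls = message.get("tool_calls") or []
    return [(c["function"]["name"], json.loads(c["function"]["arguments"])) for c in calls]
```

After your own code runs the requested tool, send its output back as a message with role "tool" and the matching tool_call_id. The model can then continue its multi-step search.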

Why TypingMind + OpenRouter?

- Best-in-class UI: TypingMind's interface is far superior to standard chat UIs

- Model flexibility: Switch between Tongyi DeepResearch 30B A3B and 200+ models instantly

- Cost control: Pay only for what you use through OpenRouter

- One-time purchase: Buy TypingMind once, use forever with any OpenRouter model

- Data privacy: Your conversations are stored locally, not on external servers

Frequently Asked Questions

Do I need a subscription to use Tongyi DeepResearch 30B A3B?

No! Through OpenRouter, you pay only for what you use with no monthly subscription. Add credits to your OpenRouter account and they never expire. TypingMind is also a one-time purchase, not a subscription.

How much will it cost to use Tongyi DeepResearch 30B A3B?

It costs $0.00009 for input and $0.0004 for output via OpenRouter. The overview table lists $0 per 1M tokens, so confirm current rates on the model's OpenRouter page. A typical conversation might cost $0.01 to $0.10 depending on length. Start with $5 to $10 in credits to test.
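Cost scales linearly with token counts. A rough estimate is input tokens times the input rate plus output tokens times the output rate. The sketch below assumes rates in US dollars per 1M tokens, matching the overview table's unit. The rates in the example call are placeholders; substitute the current figures from the model's OpenRouter page.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_rate_per_m: float, output_rate_per_m: float) -> float:
    """Estimate the USD cost of one request from token counts and per-1M-token rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return (input_tokens * input_rate_per_m + output_tokens * output_rate_per_m) / 1_000_000


# Example with placeholder rates of $0.10 (input) and $0.50 (output) per 1M tokens.
print(f"${estimate_cost(20_000, 4_000, 0.10, 0.50):.4f}")  # prints $0.0040
```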

Can I use other models besides Tongyi DeepResearch 30B A3B?

Yes! With OpenRouter + TypingMind, you get access to 200+ models including GPT-4, Claude, Gemini, Llama, Mistral, and many more. Switch between them instantly in TypingMind.

Is my data private and secure?

Yes! TypingMind stores conversations locally (web version in browser, desktop version on your device). OpenRouter handles API calls securely and doesn't train on your data. Check each provider's data policy for specifics.

Can I use Tongyi DeepResearch 30B A3B for commercial projects?

Yes! Check Tongyi DeepResearch 30B A3B's terms of service for specific commercial use policies. OpenRouter and TypingMind both support commercial use.

What if Tongyi DeepResearch 30B A3B is unavailable?

OpenRouter allows you to configure fallback models. If Tongyi DeepResearch 30B A3B is down, it can automatically route to your backup choice. You can also manually switch models in TypingMind anytime.
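Outside TypingMind, fallbacks can be expressed in the request itself. OpenRouter's model-routing documentation describes a "models" array that is tried in order when the first model is unavailable. The sketch below relies on that parameter. It also assumes the response's "model" field reports which model actually answered. The backup model ID is a hypothetical placeholder.

```python
import json

PRIMARY_MODEL = "alibaba/tongyi-deepresearch-30b-a3b"


def build_fallback_request(prompt: str, fallbacks: list[str]) -> dict:
    """Build a body listing the primary model first, then backups in priority order."""
    models = [PRIMARY_MODEL] + [m for m in fallbacks if m != PRIMARY_MODEL]
    return {
        "models": models,  # assumed OpenRouter fallback parameter
        "messages": [{"role": "user", "content": prompt}],
    }


def model_used(response: dict) -> str:
    """Report which model answered (assumption: given in the response's "model" field)."""
    return response.get("model", "unknown")


body = build_fallback_request("Summarize FRAMES.", ["example/backup-model"])  # hypothetical backup ID
print(json.dumps(body["models"]))
```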

How do I cancel or get a refund?

OpenRouter: No subscriptions to cancel. Unused credits remain in your account forever.