How to use Synthetic API Key for AI chat

About Synthetic

Synthetic.new is a privacy-focused AI platform that offers private access to multiple open-source LLMs through simple flat-rate subscriptions: plans start at $20/month for 125 requests per 5 hours, and a $60/month plan allows 1,250 requests per 5 hours.

The platform provides access to 19+ always-on models, including Llama 3 variants with context windows of up to 128K tokens, specialized coding models, and task-specific LoRA adapters. Its privacy guarantees include no training on user data and automatic deletion of data within 14 days.

Key features include an OpenAI-compatible API for integration with tools such as Roo, Cline, and Octofriend; a web-based chat interface; on-demand launching of models from Hugging Face repositories on cloud GPUs, billed separately per minute; predictable pricing without per-token charges; and support for large-context coding tasks.

The platform prioritizes developer workflows and code generation with strong privacy guarantees and cost-effective access to powerful open-source models.

Step-by-step guide to using your Synthetic API key to chat with AI

1. Get Your Synthetic API Key

First, you'll need to obtain an API key from Synthetic. This key lets you access Synthetic's models directly from third-party tools such as TypingMind.

- Visit Synthetic's API console

- Sign up or log in to your account

- Navigate to the API keys section

- Generate a new API key (copy it immediately, as some providers only show it once)

- Save your API key in a secure password manager or encrypted note
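If you also call the API from your own scripts, a common way to keep the key out of source code is to read it from an environment variable. The sketch below assumes a variable named `SYNTHETIC_API_KEY` (a hypothetical name chosen to match the `<SYNTHETIC_API_KEY>` placeholder used in the header settings of step 2), and the helper names are illustrative only. TypingMind itself stores the key you paste into its settings, so this applies only to code you write yourself.

```python
import os


def load_synthetic_key(var_name: str = "SYNTHETIC_API_KEY") -> str:
    """Read the API key from an environment variable instead of hardcoding it.

    SYNTHETIC_API_KEY is a hypothetical variable name, not one Synthetic defines.
    """
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your Synthetic API key")
    return key


def auth_header(key: str) -> dict:
    """Build the Authorization header in the 'Bearer <key>' form."""
    return {"Authorization": f"Bearer {key}"}
```

Failing loudly when the variable is missing avoids sending requests with an empty key and getting a less obvious authentication error back.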

2. Connect Your Synthetic API Key on TypingMind

Once you have your Synthetic API key, connecting it to TypingMind to chat with AI is straightforward:

- Open TypingMind in your browser

- Click the "Settings" icon (gear symbol)

- Navigate to "Models" section

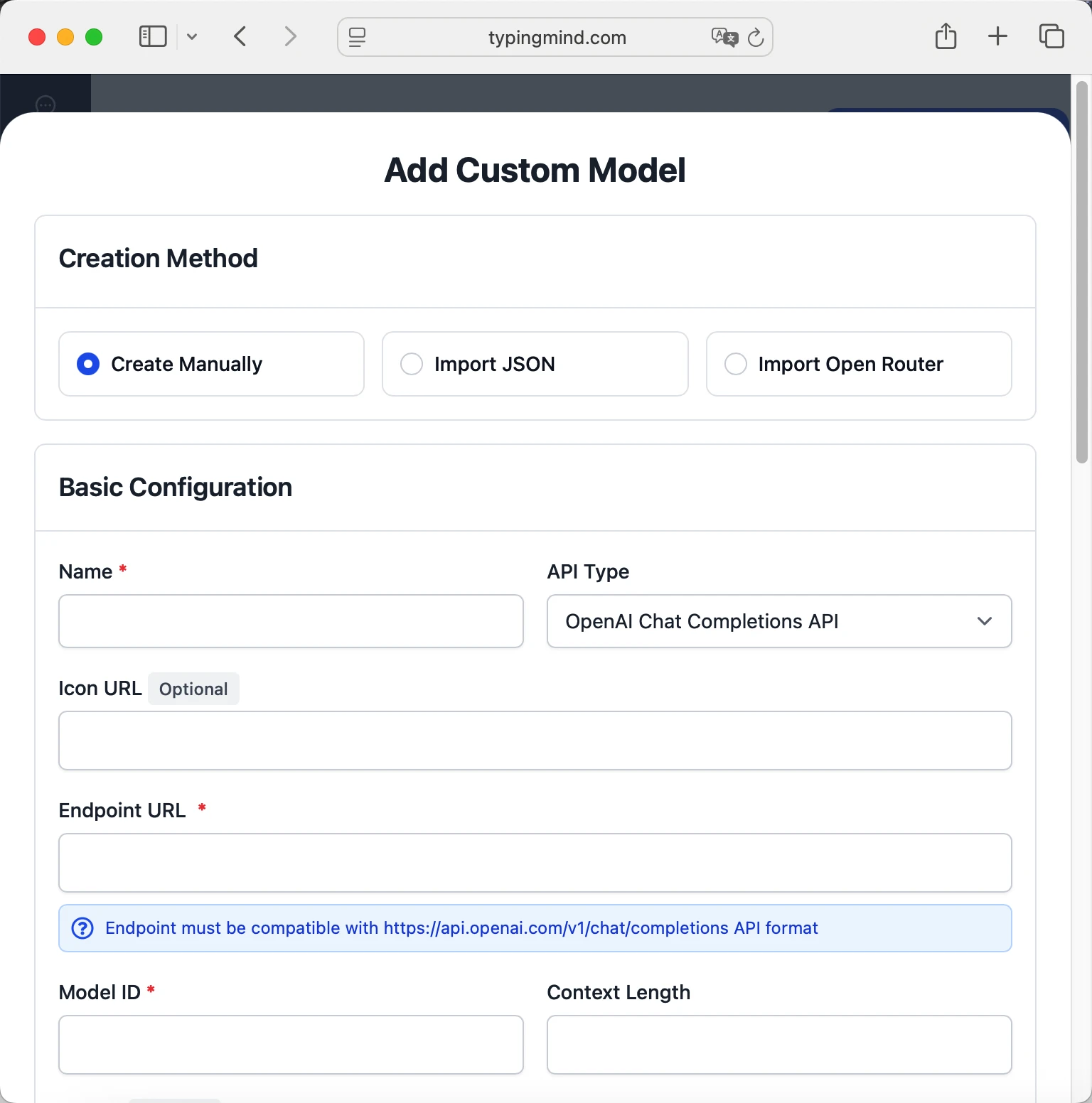

- Click "Add Custom Model"

- Fill in the model information:
  - Name: hf:meta-llama/Llama-3.1-70B-Instruct via Synthetic (or your preferred name)
  - Endpoint: https://api.synthetic.new/openai/v1/chat/completions
  - Model ID: hf:meta-llama/Llama-3.1-70B-Instruct, for example (check Synthetic's model list)
  - Context Length: enter the model's context window (e.g., 32000 for hf:meta-llama/Llama-3.1-70B-Instruct)

- Add custom headers by clicking "Add Custom Headers" in the Advanced Settings section:
  - Authorization: Bearer <SYNTHETIC_API_KEY>
  - X-Title: typingmind.com
  - HTTP-Referer: https://www.typingmind.com

- Enable "Support Plugins (via OpenAI Functions)" if the model supports the "functions" or "tool_calls" parameter, or enable "Support OpenAI Vision" if the model supports vision.

- Click "Test" to verify the configuration

- If you see "Nice, the endpoint is working!", click "Add Model"
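Because the endpoint is OpenAI-compatible, you can also check the same configuration outside TypingMind. The sketch below builds (but does not send) an equivalent chat completions request with Python's standard library, reusing the endpoint, model ID, and headers from the form above. It assumes Synthetic accepts the standard OpenAI request body (`model`, `messages`, and an optional `tools` list for function calling) and returns the standard response shape; the function names are hypothetical.

```python
import json
import urllib.request

# Values taken from the TypingMind custom-model form in step 2.
ENDPOINT = "https://api.synthetic.new/openai/v1/chat/completions"
MODEL_ID = "hf:meta-llama/Llama-3.1-70B-Instruct"


def build_chat_request(api_key, messages, model=MODEL_ID, tools=None):
    """Build (but do not send) an OpenAI-style chat completions request."""
    body = {"model": model, "messages": messages}
    if tools:
        # Only include tool definitions when the model supports tool calling.
        body["tools"] = tools
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
        # Same optional headers entered in TypingMind's Advanced Settings.
        "X-Title": "typingmind.com",
        "HTTP-Referer": "https://www.typingmind.com",
    }
    return urllib.request.Request(
        ENDPOINT,
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def extract_reply(response_json):
    """Pull the assistant's text out of an OpenAI-style chat completion response."""
    return response_json["choices"][0]["message"]["content"]
```

To actually send the request, pass it to `urllib.request.urlopen`, decode the response body with `json.load`, and hand the result to `extract_reply`. An authentication error at that point usually means the key or the `Authorization` header is wrong, which is the same thing TypingMind's "Test" button would surface.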

3. Start Chatting with Synthetic Models

Now you can start chatting with Synthetic models through TypingMind:

- Select your preferred Synthetic model from the model dropdown menu

- Start typing your message in the chat input

- Enjoy faster responses and better features than the official interface

- Switch between different AI models as needed


- Use specific, detailed prompts for better responses (How to use Prompt Library)

- Create AI agents with custom instructions for repeated tasks (How to create AI Agents)

- Use plugins to extend Synthetic capabilities (How to use plugins)

- Upload documents and images directly to chat for AI analysis and discussion (Chat with documents)
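The plugin support enabled in step 2 relies on OpenAI-style function calling: the model replies with `tool_calls`, the client runs the requested functions, and it sends the results back as `tool` messages. TypingMind's plugins handle this loop for you. The sketch below shows the client side for your own scripts, assuming Synthetic returns the standard OpenAI `tool_calls` shape; `get_word_count` is a hypothetical example tool, not a real TypingMind plugin.

```python
import json


def get_word_count(text: str) -> dict:
    """Hypothetical local tool the model may ask to call."""
    return {"words": len(text.split())}


# Map tool names (as declared in the request's "tools" list) to local functions.
LOCAL_TOOLS = {"get_word_count": get_word_count}


def handle_tool_calls(assistant_message):
    """Run each tool call requested by the model and build the follow-up tool messages.

    Expects the OpenAI-style shape message["tool_calls"][i]["function"]["name" / "arguments"],
    where "arguments" is a JSON-encoded string.
    """
    results = []
    for call in assistant_message.get("tool_calls") or []:
        fn = LOCAL_TOOLS[call["function"]["name"]]
        args = json.loads(call["function"]["arguments"] or "{}")
        results.append({
            "role": "tool",
            "tool_call_id": call["id"],
            "content": json.dumps(fn(**args)),
        })
    return results
```

After appending the assistant message and these tool messages to the conversation, the client sends another request so the model can use the results in its answer.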

4. Monitor Your AI Usage and Costs

Using your own API key with TypingMind gives you transparency and control over your usage. Synthetic's subscriptions are flat-rate with request limits rather than per-token charges, and on-demand models are billed separately per minute. Visit https://synthetic.new/billing to monitor your Synthetic API usage and set spending limits.
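Since the plans are defined by request counts (for example, 125 requests per 5 hours), a script that calls the API directly can keep its own rough tally. The sketch below assumes the limit is a rolling 5-hour window, which the plan description does not spell out, so treat it as an approximation and rely on the billing page for actual figures. The class name and defaults are illustrative.

```python
import time
from collections import deque


class RequestWindow:
    """Client-side count of requests made within a rolling time window.

    Assumes the quota is a rolling window (e.g. 125 requests per 5 hours);
    Synthetic's billing page remains the authoritative source.
    """

    def __init__(self, limit=125, window_seconds=5 * 60 * 60, clock=time.monotonic):
        self.limit = limit
        self.window = window_seconds
        self.clock = clock  # injectable so the class can be tested without waiting
        self.timestamps = deque()

    def _prune(self, now):
        # Drop requests that have aged out of the window.
        while self.timestamps and now - self.timestamps[0] >= self.window:
            self.timestamps.popleft()

    def remaining(self):
        """Number of requests still available in the current window."""
        self._prune(self.clock())
        return self.limit - len(self.timestamps)

    def record(self):
        """Record one request; return False (without recording) if the limit is reached."""
        now = self.clock()
        self._prune(now)
        if len(self.timestamps) >= self.limit:
            return False
        self.timestamps.append(now)
        return True
```

Call `record()` before each API request and skip or delay the request when it returns `False`. The injectable `clock` parameter makes the behavior easy to verify without waiting five hours.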

- Use less expensive models for simple tasks

- Keep prompts concise but specific to reduce token usage

- Use TypingMind's prompt caching to reduce repeat costs (How to enable prompt caching)

- Use RAG (retrieval-augmented generation) for large documents so that only the relevant passages are sent with each request (How to use RAG)
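RAG reduces token usage by sending only the passages relevant to each question instead of an entire document. The sketch below illustrates the idea with a deliberately naive stand-in: it splits the document into fixed-size chunks and ranks them by keyword overlap with the question. Production RAG systems usually rank chunks by embedding similarity, and this is not TypingMind's implementation; the function names and chunk sizes are hypothetical.

```python
import re


def chunk_text(text, max_words=200):
    """Split a long document into chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def top_chunks(question, chunks, k=3):
    """Rank chunks by how many distinct question words they contain (naive keyword overlap)."""
    q_words = set(re.findall(r"\w+", question.lower()))

    def score(chunk):
        return len(q_words & set(re.findall(r"\w+", chunk.lower())))

    # sorted() is stable, so tied chunks keep their original document order.
    return sorted(chunks, key=score, reverse=True)[:k]


def build_prompt(question, document, k=3, max_words=200):
    """Send only the most relevant chunks instead of the whole document."""
    context = "\n\n".join(top_chunks(question, chunk_text(document, max_words), k))
    return f"Answer using only this context:\n\n{context}\n\nQuestion: {question}"
```

With `k=3` and 200-word chunks, each request carries roughly 600 words of context regardless of how long the source document is, which is where the savings come from.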